Aortic stenosis might look straightforward on paper, but in reality it’s one of the most misclassified conditions in echo. Getting consistent measurements depends heavily on operator skill and which views get selected. That’s exactly what Dr. Roxana Botan from the Heart Center at University Hospital Dresden set out to test with her team in a large validation study presented at ESC.

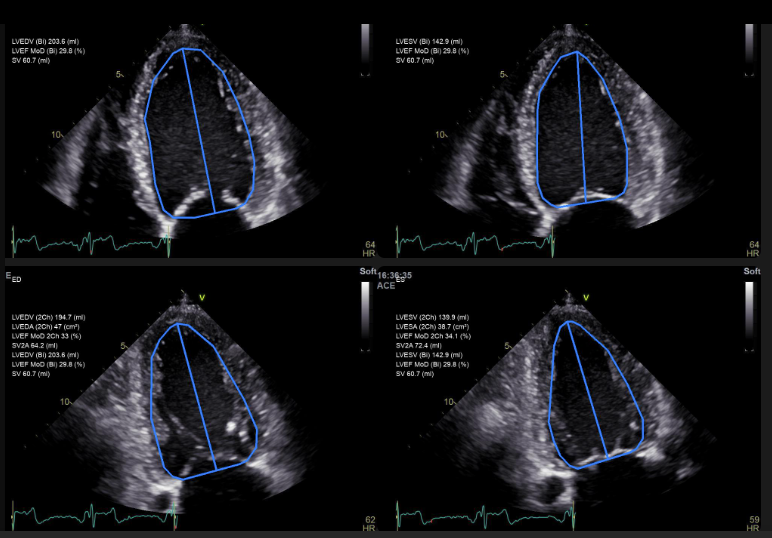

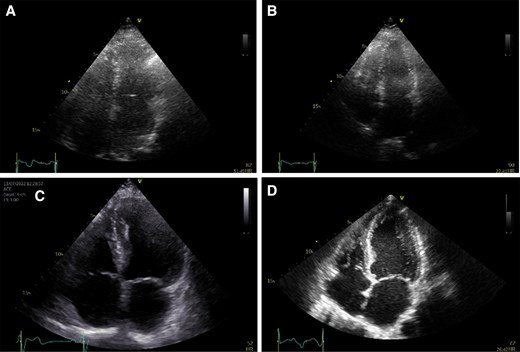

They pulled data from 1,245 patients and ran it through a fully automated pipeline. The AI selected the echo views on its own, then measured the key aortic parameters, the ones clinicians usually spend the most time double-checking. The team then compared the automated outputs against the clinician-measured values for the same patients.

The correlation wasn’t just “acceptable,” it was strong across the board, with correlation coefficients (R) ranging from roughly 0.81 to 0.98. That’s the kind of alignment where you stop asking whether the model works and start asking where it fits into the workflow.
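For readers who want a feel for what an R value like that means, here is a minimal sketch of a Pearson correlation between automated and clinician measurements. It is not the study's code: the function name `pearson_r`, the choice of peak velocity as the parameter, and every number in the two lists are made up for illustration only.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and sums of squared deviations from each mean
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical peak aortic velocities in m/s (illustrative values, not study data)
clinician = [2.1, 3.4, 4.2, 2.8, 4.9, 3.9]
automated = [2.2, 3.3, 4.4, 2.7, 4.8, 4.1]

r = pearson_r(clinician, automated)
print(f"R = {r:.2f}")
```

One caveat worth keeping in mind: a high R says the two sets of measurements rise and fall together, not that they are identical. A model that read every value 10% too high would still score close to 1.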

The value here isn’t replacing anyone. It’s standardization. Aortic stenosis grading swings a lot between operators. If an automated model can reduce that variability and tighten the workflow, clinicians get more reliable inputs and fewer borderline cases fall into diagnostic limbo.

The full study breaks down the dataset, methods, and validation details if you want to go deeper.


Disclaimer: Funded by the European Union. Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or European Innovation Council and SMEs Executive Agency. Neither the European Union nor the granting authority can be held responsible for them.